ASONAM 2019

Marriott Downtown, Vancouver, Canada, 27-30 August, 2019

| Hours | Shaughnessy I | Shaughnessy II | Pinnacle I | Pinnacle II | Pinnacle III |
|---|---|---|---|---|---|
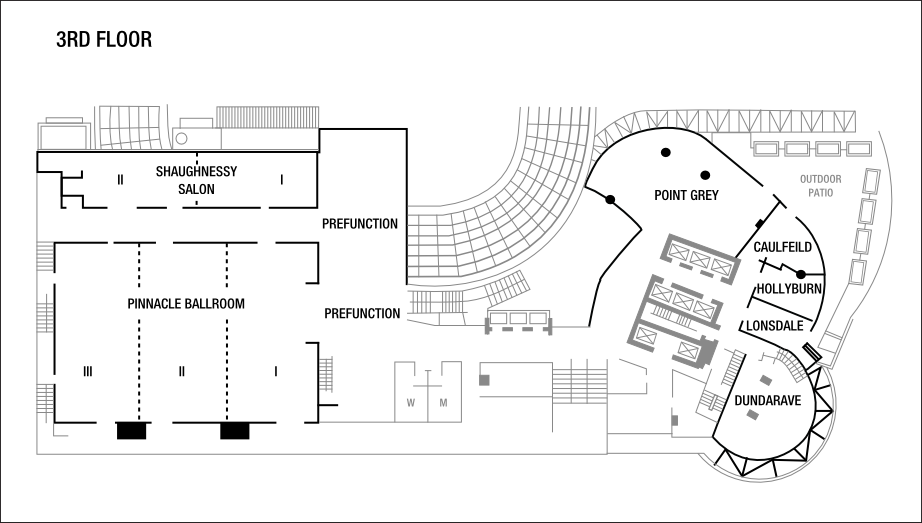

| 07:30 | Registration (in the foyer outside of the Pinnacle Ballroom) | | | | |
| 08:30-10:30 | READNet Workshop Session I (Session Chair: Michele Coscia) | SI Workshop Session (Session Chair: Jalal Kawash) | Tutorial I, Part I (08:30-10:30) | | |
| 10:30-11:00 | | | | | |
| 11:00-12:30 | READNet Workshop Session II (Session Chair: Andrea De Salve) | SNAST Workshop Session I (Session Chair: Thirimachos Bourlai) | SNAA Workshop Session I (Session Chair: Piotr Bródka) | Demo Track Session (Session Chair: Tansel Ozyer) | Tutorial I, Part II (11:00-11:30); Tutorial II, Part I (11:30-12:30) |
| 12:30-14:00 | | | | | |
| 14:00-16:00 | MSNDS Workshop Session I (Session Chair: Min-Yuh Day) | SNAST Workshop Session II (Session Chair: Panagiotis Karampelas) | SNAA Workshop Session II (Session Chair: Piotr Bródka) | Posters Madness Session (Session Chair: Mohammad Tayebi) | Tutorial II, Part II (14:00-15:30); Tutorial III, Part I (15:30-16:00) |
| 16:00-16:30 | | | | | |
| 16:30-18:30 | MSNDS Workshop Session II (Session Chair: Shih-Hung Wu) | PhD Track Session (Session Chair: Tansel Ozyer) | Tutorial III, Part II | | |
| 19:30-22:00 | | | | | |

| Time | Tutorial Title | Instructor |
|---|---|---|
| 08:30-10:30 and 11:00-11:30 | Tutorial I: Knowledge Discovery in the social network era: An overview of (big) data analytics approaches for social networks | Elio Masciari, University Federico II of Naples, Italy |
| 11:30-12:30 and 14:00-15:30 | Tutorial II: Introduction to Social Network Analysis with NodeXL | Marc A. Smith, Social Media Research Foundation, Redwood City, CA |
| 15:30-16:00 and 16:30-18:30 | Tutorial III: Using RAPIDS for Accelerated Social Network Analysis | Bradley Rees and Corey Nolet, RAPIDS cuML Sr Researcher, NVIDIA, San Jose, CA |

| Hours | Pinnacle I | Pinnacle II | Pinnacle III | Shaughnessy I | Shaughnessy II |
|---|---|---|---|---|---|
| 07:30 | Registration (in the foyer outside of the Pinnacle Ballroom) | | | | |
| 09:00-09:30 | Opening Session for ASONAM (Pinnacle Ballroom) | | | | |
| 09:30-10:30 | Keynote I: Graphons and Machine Learning: Modeling and Estimation of Sparse Networks at Scale. Jennifer Tour Chayes, Microsoft Research, Massachusetts, USA. Chair: Wei Chen (Pinnacle Ballroom) | | | | |
| 10:30-11:00 | | | | | |
| 11:00-12:30 | 1A - Communities I (Session Chair: Michele Coscia) | 1B - Misbehavior & Misinformation I (Session Chair: Rahul Pandey) | 1C - Network Embedding (Session Chair: Tyler Derr) | Industrial Track Session I (Session Chair: Neil Shah) | FOSINT-SI Session I (Session Chair: Mohammad Tayebi) |
| 12:30-14:00 | | | | | |
| 14:00-16:00 | 2A - Information & Influence Diffusion I (Session Chair: Elio Masciari) | 2B - Misbehavior & Misinformation II (Session Chair: Roy Ka-Wei Lee) | 2C - Network Analysis with Machine Learning I (Session Chair: Ashok Srinivasan) | Industrial Track Session II (Session Chair: Konstantinos Xylogiannopoulos) | FOSINT-SI Session II (Session Chair: Uwe Glässer) |
| 16:00-16:30 | | | | | |
| 16:30-18:00 | Panel (Pinnacle Ballroom) | | | | |

| Hours | Pinnacle I | Pinnacle II | Pinnacle III | Shaughnessy I | Shaughnessy II |
|---|---|---|---|---|---|
| 07:30 | Registration (in the foyer outside of the Pinnacle Ballroom) | | | | |
| 09:30-10:30 | Keynote II: Friendship Paradox and Information Bias in Networks. Kristina Lerman, University of Southern California, USA. Chair: Francesca Spezzano (Pinnacle Ballroom) | | | | |
| 10:30-11:00 | | | | | |
| 11:00-12:30 | 3A - Communities II (Session Chair: Xiaokui Xiao) | 3B - Social Media Analysis (Session Chair: Sumeet Kumar) | 3C - Network Analysis with Machine Learning II (Session Chair: Rahul Pandey) | FAB Session I (Session Chair: Jon Rokne) | FOSINT-SI Session III (Session Chair: Francesca Spezzano) |
| 12:30-14:00 | | | | | |
| 14:00-16:00 | 4A - Information & Influence Diffusion II (Session Chair: Andrea Tagarelli) | 4B - Elections and Politics (Session Chair: Dimitris Spiliotopoulos) | 4C - Network Analysis I (Session Chair: Vachik Dave) | FAB Session II (Session Chair: Panagiotis Karampelas) | FOSINT-SI Session IV (Session Chair: Andrew Park) |
| 16:00-16:30 | | | | | |
| 16:30-18:00 | 5A - Recommendations (Session Chair: Chang-Tien Lu) | 5B - Applications I (Session Chair: Ashok Srinivasan) | 5C - Behavioral Modeling (Session Chair: Ninareh Mehrabi) | FAB Session III (Session Chair: Ismail Toroslu) | Multidisciplinary Session I (Session Chair: Jalal Kawash) |
| 19:30-22:00 | Conference Dinner (Pinnacle Ballroom) | | | | |

| Hours | Pinnacle I | Pinnacle II | Pinnacle III | Shaughnessy I | Shaughnessy II |
|---|---|---|---|---|---|
| 07:30 | Registration (in the foyer outside of the Pinnacle Ballroom) | | | | |
| 09:30-10:30 | Keynote III: Graph Neural Networks and Applications. Jie Tang, Tsinghua University, China. Chair: Xiaokui Xiao (Pinnacle Ballroom) | | | | |
| 10:30-11:00 | | | | | |
| 11:00-12:30 | 6A - Network Algorithms (Session Chair: Tyler Derr) | 6B - Network Modeling (Session Chair: Vanessa Cedeno-Mieles) | 6C - Misinformation & Online Content (Session Chair: Francesca Spezzano) | HI-BI-BI Session I (Session Chair: Peter Peng) | Multidisciplinary Session II (Session Chair: Panagiotis Karampelas) |
| 12:30-14:00 | Lunch (Pinnacle Ballroom) | | | | |
| 14:00-16:00 | 7A - Modeling & Algorithms (Session Chair: Peng Ni) | 7B - Applications II (Session Chair: Daniel Zhang) | 7C - Network Analysis II (Session Chair: Wei Chen) | HI-BI-BI Session II (Session Chair: Tansel Ozyer) | Multidisciplinary Session III (Session Chair: Min-Yuh Day) |
| 16:10-16:30 | Farewell (Pinnacle Ballroom) | | | | |

Social Influence in Our Society

Moderator:

PhD Track Session (16:30-18:30)

| Authors | Title | Time |
|---|---|---|
| Johanna M. Werz, Valerie Varney and Ingrid Isenhardt | The curse of self-presentation: Looking for career patterns in online CVs | 16:30-16:55 |
| Henry Dambanemuya and Agnes Horvat | Network-Aware Multi-Agent Simulations of Herder-Farmer Conflicts | 16:55-17:20 |
| Pallavi Jain, Robert Ross and Bianca Schoen Phelan | Estimating Distributed Representation Performance in Disaster-Related Social Media Classification | 17:20-17:45 |
| Renny Márquez, Richard Weber and André C.P.L.F. de Carvalho | | 17:45-18:10 |
| Christian Zingg, Giona Casiraghi, Giacomo Vaccario and Frank Schweitzer | | 18:10-18:35 |

Industrial Track Session I (11:00-12:30)

| Authors | Title | Time |
|---|---|---|
| Ankit Kumar Saw | | 11:00-11:25 |
| John Piorkowski, Ian McCulloh | Examining MOOC superposter behavior using social network analysis | 11:25-11:50 |
| Alireza Pourali, Fattane Zarrinkalam, Ebrahim Bagheri | Neural Embedding Features for Point-of-Interest Recommendation | 11:50-12:15 |

Industrial Track Session II (14:00-16:00)

| Authors | Title | Time |
|---|---|---|
| Shreya Jain, Dipankar Niranjan, Hemank Lamba, Neil Shah, Ponnurangam Kumaraguru | | 14:00-14:25 |
| Shin-Ying Huang, Yen-Wen Huang, Ching-Hao Mao | A multi-channel cybersecurity news and threat intelligent engine - SecBuzzer | 14:25-14:50 |
| Yang Zhang, Xiangyu Dong, Daniel Zhang, Dong Wang | A Syntax-based Learning Approach to Geo-locating Abnormal Traffic Events using Social Sensing | 14:50-15:15 |
| Yingtong Dou and Philip Yu | | 15:15-15:40 |

FAB Session I (11:00-12:30)

| Authors | Title | Type |
|---|---|---|
| Carson Leung | | Full Paper |
| Konstantinos Xylogiannopoulos, Panagiotis Karampelas and Reda Alhajj | | Full Paper |
| Sirui Sun, Bin Wu, Zixing Zhang, Nianwen Ning and Bai Wang | A Hierarchical Insurance Recommendation Framework Using GraphOLAM Approach | Full Paper |
| Ahmet Anıl Müngen, Emre Doğan and Mehmet Kaya | | Short Paper |

FAB Session II (14:00-16:00)

| Authors | Title | Type |
|---|---|---|
| Emanuela Todeva, David Knoke and Donka Keskinova | Multi-Stage Clustering with Complementary Structural Analysis of 2-Mode Networks | Full Paper |
| Tung Nguyen, Li Zhang and Aron Culotta | Estimating Tie Strength in Follower Networks to Measure Brand Perceptions | Full Paper |
| Mahendra Piraveenan, Sheung Yat Law and Dharshana Kasthirirathne | | Full Paper |
| Rich Takacs and Ian McCulloh | Dormant Bots in Social Media: Twitter and the 2018 U.S. Senate Election | Full Paper |
| Konstantinos Xylogiannopoulos | Exhaustive Exact String Matching: The Analysis of the Full Human Genome | Full Paper |

FAB Session III (16:30-18:00)

| Authors | Title | Type |
|---|---|---|
| Ahmet Engin Bayrak and Faruk Polat | | Full Paper |
| Lihi Idan and Joan Feigenbaum | | Full Paper |
| Esen Tutaysalgir, Pinar Karagoz and Ismail Toroslu | | Full Paper |
| Sharon Grubner, Ian McCulloh and John Piorkowski | Social Media as a Main Source of Customer Feedback – Alternative to Customer Satisfaction Surveys | Short Paper |

FOSINT-SI Session I (11:00-12:30)

| Authors | Title | Type |
|---|---|---|
| Zoheb Borbora, Arpita Chandra, Ponnurangam Kumaraguru and Jaideep Srivastava | | Regular Paper |
| Tor Berglind, Lisa Kaati and Bjorn Pelzer | | Regular Paper |
| Anu Shrestha and Francesca Spezzano | | Short Paper |
| Justin Song, Valerie Spicer, Andrew Park, Herbert H. Tsang and Patricia L. Brantingham | | Short Paper |

FOSINT-SI Session II (14:00-16:00)

| Authors | Title | Type |
|---|---|---|
| David Skillicorn, Queen's University, Canada | FOSINT-SI Keynote (14:00-15:00) | |
| Vivin Paliath and Paulo Shakarian | | Best Paper Candidate |
| Shao-Fang Wen, Mazaher Kianpour and Stewart Kowalski | An Empirical Study of Security Culture in Open Source Software Communities | Best Paper Candidate |

FOSINT-SI Session III (11:00-12:30)

| Authors | Title | Type |
|---|---|---|
| Avishek Bose, Vahid Behzadan, Carlos Aguirre and William Hsu | | Regular Paper |
| Aditya Pingle, Aritran Piplai, Sudip Mittal, Anupam Joshi, James Holt and Richard Zak | | Regular Paper |
| Konstantinos Xylogiannopoulos, Panagiotis Karampelas, Reda Alhajj | Text Mining for Malware Classification Using Multivariate All Repeated Patterns Detection | Regular Paper |

FOSINT-SI Session IV (14:00-16:00)

| Authors | Title | Type |
|---|---|---|
| Mohammed Almukaynizi, Malay Shah and Paulo Shakarian | A Hybrid KRR-ML Approach to Predict Malicious Email Campaigns | Short Paper |
| Mohammed Rashed, John Piorkowski and Ian McCulloh | Evaluation of Extremist Cohesion in a Darknet Forum Using ERGM and LDA | Short Paper |
| João Evangelista, Domingos Napolitano, Márcio Romero and Renato Sassi | | Short Paper |
| Emily Alfs, Doina Caragea, Dewan Chaulagain, Sankardas Roy, Nathan Albin and Pietro Poggi-Corradini | | Short Paper |
| Choukri Djellali, Mehdi Adda and Mohamed Tarik Moutacalli | | Short Paper |

READNet Workshop Session I (08:30-10:30)

| Authors | Title | Time |
|---|---|---|
| Chairs: Andrea De Salve, Michele Coscia | Workshop Opening | 08:30-08:45 |
| Michele Coscia | Keynote | 08:45-09:40 |
| Meisam Hejazinia, Pavlos Mitsoulis-Ntompos and Serena Zhang | | 09:40-10:05 |
| Mariella Bonomo, Gaspare Ciaccio, Andrea De Salve and Simona E. Rombo | | 10:05-10:30 |

READNet Workshop Session II (11:00-12:30)

| Authors | Title | Time |
|---|---|---|
| Dionisis Margaris, Dimitris Spiliotopoulos and Costas Vassilakis | | 11:00-11:25 |
| Carmela Comito | | 11:25-11:50 |
| Gianluca Lax and Antonia Russo | | 11:50-12:15 |
| | Workshop Closure | 12:15 |

Demo Track Session (11:00-12:30)

| Authors | Title | Time |
|---|---|---|
| Adewale Obadimu, Muhammad Nihal Hussain and Nitin Agarwal | | 11:00-11:15 |
| Mayank Kejriwal and Peilin Zhou | | 11:15-11:30 |
| Thomas Marcoux, Nitin Agarwal, Adewale Obadimu and Nihal Hussain | | 11:30-11:45 |
| Trang Ha, Quyen Hoang and Kyumin Lee | | 11:45-12:00 |
| Ying Zhao, Charles C. Zhou and Sihui Huang | | 12:00-12:15 |
| Zizhen Chen and David Matula | | 12:15-12:30 |

Tutorial I (Part I: 08:30-10:30; Part II: 11:00-11:30)


Tutorial II (Part I: 11:30-12:30; Part II: 14:00-15:30)


Tutorial III (Part I: 15:30-16:00; Part II: 16:30-18:30)


MSNDS Workshop Session I (14:00-16:00)

| Authors | Title | Time |
|---|---|---|
| Patryk Pazura: West Pomeranian University of Technology, Szczecin; Jaroslaw Jankowski: West Pomeranian University of Technology | | 14:00-14:25 |
| Li Chen Cheng: National Taipei University of Technology; Song-Lin Tsai: Soochow University | Deep Learning for Automated Sentiment Analysis of Social Media | 14:25-14:50 |
| Takayasu Fushimi: Tokyo University of Technology; Kenichi Kanno: Tokyo University of Technology | | 14:50-15:15 |
| Shih-Hung Wu: Chaoyang University of Technology; Jun-Wei Wang: Chaoyang University of Technology | Integrating Neural and Syntactic Features on the Helpfulness Analysis of the Online Customer Reviews | 15:15-15:40 |

MSNDS Workshop Session II (16:30-18:30)

| Authors | Title | Time |
|---|---|---|
| K. (Lynn) Putman: LIACS, Leiden University; Hanjo D. Boekhout: LIACS, Leiden University; Frank W. Takes: LIACS, Leiden University | Fast Incremental Computation of Harmonic Closeness Centrality in Directed Weighted Networks | 16:30-16:55 |
| Min-Yuh Day: Tamkang University; Jian-Ting Lin: Tamkang University | Artificial Intelligence for ETF Market Prediction and Portfolio Optimization | 16:55-17:20 |
| Logan Praznik: Brandon University, Brandon, Canada; Gautam Srivastava: Brandon University, Brandon, Canada; Chetan Mendhe: Lakehead University, Thunder Bay, Canada; Vijay Mago: Lakehead University, Thunder Bay, Canada | Vertex-Weighted Measures for Link Prediction in Hashtag Graphs | 17:20-17:45 |
| Gui-Ru Li: National Central University; Chia-Hui Chang: National Central University | Semantic Role Labeling for Opinion Target Extraction from Chinese Social Network | 17:45-18:10 |

SNAST Workshop Session I (11:00-12:30)

| Authors | Title | Time |
|---|---|---|
| Thirimachos Bourlai | Opening Remarks | 11:00-11:10 |
| Lingwei Chen, Shifu Hou, Yanfang Ye, Thirimachos Bourlai, Shouhuai Xu and Liang Zhao | iTrustSO: An Intelligent System for Automatic Detection of Insecure Code Snippets in Stack Overflow | 11:10-11:40 |
| Dimitrios Lappas, Panagiotis Karampelas and George Fessakis | The role of social media surveillance in search and rescue missions | 11:40-12:10 |
| Sho Tsugawa and Sumaru Niida | The Impact of Social Network Structure on the Growth and Survival of Online Communities | 12:10-12:40 |

SNAST Workshop Session II (14:00-16:00)

| Authors | Title | Time |
|---|---|---|
| Jacob Rose and Thirimachos Bourlai | Deep Learning Based Estimation of Facial Attributes on Challenging Mobile Phone Face Datasets | 14:00-14:30 |
| Dimitris Spiliotopoulos, Costas Vassilakis and Dionisis Margaris | Data-driven Country Safety Monitoring Terrorist Attack Prediction | 14:30-15:00 |
| Kaustav Basu and Arun Sen | On Augmented Identifying Codes for Monitoring Drug Trafficking Organizations | 15:00-15:30 |
| Suha Reddy Mokalla and Thirimachos Bourlai | On Designing MWIR and Visible Band based DeepFace Detection Models | 15:30-16:00 |

Posters Madness Session (14:00-16:00)

| Authors | Title | Type |
|---|---|---|
| Chung-Chi Chen, Hen-Hsen Huang and Hsin-Hsi Chen | Next Cashtag Prediction on Social Trading Platforms with Auxiliary Tasks | Poster |
| David Spence, Christopher Inskip, Novi Quadrianto and David Weir | | Poster |
| Dipanjyoti Paul, Rahul Kumar, Sriparna Saha and Jimson Mathew | Online Feature Selection for Multi-label Classification in Multi-objective Optimization Framework | Poster |
| Katchaguy Areekijseree, Yuzhe Tang and Sucheta Soundarajan | | Poster |
| Liang Feng, Qianchuan Zhao and Cangqi Zhou | An Efficient Method to Find Communities in K-partite Networks | Poster |
| Maryam Ramezani, Mina Rafiei, Soroush Omranpour and Hamid R. Rabiee | | Poster |
| Masaomi Kimura | CAB-NC: The Correspondence Analysis Based Network Clustering Method | Poster |
| Meysam Ghaffari, Ashok Srinivasan and Xiuwen Liu | High-resolution home location prediction from tweets using deep learning with dynamic structure | Poster |
| Parham Hamouni, Taraneh Khazaei and Ehsan Amjadian | TF-MF: Improving Multiview Representation for Twitter User Geolocation Prediction | Poster |
| Shalini Priya, Saharsh Singh, Sourav Kumar Dandapat, Kripabandhu Ghosh and Joydeep Chandra | Identifying Infrastructure Damage during Earthquake using Deep Active Learning | Poster |
| Taha Hassan, Bob Edmison, Larry Cox, Matthew Louvet and Daron Williams | Exploring the Context of Course Rankings on Online Academic Forums | Poster |
| Vivek Singh and Connor Hofenbitzer | Fairness across Network Positions in Cyberbullying Detection Algorithms | Poster |
| Zhou Yang, Long Nguyen and Fang Jin | | Poster |
| Adrien Benamira, Benjamin Devillers, Etienne Lesot, Ayush K. Rai, Manal Saadi and Fragkiskos Malliaros | Semi-Supervised Learning and Graph Neural Networks for Fake News Detection | Poster |

SNAA Workshop Session I (11:00-12:30)

| Authors | Title | Time |
|---|---|---|
| | | 11:00-11:10 |
| Christopher Yong, Charalampos Chelmis, Wonhyung Lee and Daphney-Stavroula Zois | Understanding Online Civic Engagement: A Multi-Neighborhood Study of SeeClickFix | 11:10-11:35 |
| Charalampos Chelmis, Mengfan Yao and Wonhyung Lee | Web and Society: A First Look into the Network of Human Service Providers | 11:35-12:00 |
| Do Yeon Kim, Xiaohang Li, Sheng Wang, Yunying Zhuo and Roy Ka-Wei Lee | Topic Enhanced Word Embedding for Toxic Content Detection in Q&A Sites | 12:00-12:25 |

SNAA Workshop Session II (14:00-15:30)

| Authors | Title | Time |
|---|---|---|
| Arpita Chandra, Zoheb Borbora, Ponnurangam Kumaraguru and Jaideep Srivastava | Finding Your Social Space: Empirical Study of Social Exploration in Multiplayer Online Games | 14:00-14:25 |
| Abu Saleh Md. Tayeen, Abderrahmen Mtibaa and Satyajayant Misra | | 14:25-14:50 |
| Sandra Mitrovic, Laurent Lecoutere and Jochen De Weerdt | A Comparison of Methods for Link Sign Prediction with Signed Network Embeddings | 14:50-15:15 |
| | | 15:15-15:30 |

SI Workshop Session (9:00-10:30)

| Authors | Title | Time |
|---|---|---|
| Jan Hauffa, Wolfgang Bräu and Georg Groh | Detection of Topical Influence in Social Networks via Granger-Causal Inference: A Twitter Case Study | 09:00-09:30 |
| Sukankana Chakraborty, Sebastian Stein, Markus Brede, Ananthram Swami, Geeth de Mel and Valerio Restocchi | | 09:30-10:00 |
| Mihai Valentin Avram, Shubhanshu Mishra, Nikolaus Nova Parulian and Jana Diesner | Adversarial perturbations to manipulate the perception of power and influence in networks | 10:00-10:30 |

HI-BI-BI Symposium Session I (11:00-12:30)

| Authors | Title | Type |
|---|---|---|
| Joseph De Guia, Madhavi Devaraj and Carson Leung | DeepGx: Deep Learning Using Gene Expression for Cancer Classification | Full Paper |
| Hanane Grissette and El Habib Nfaoui | | Full Paper |
| Hüseyin Vural, Mehmet Kaya and Reda Alhajj | | Short Paper |
| Carmela Comito, Agostino Forestiero and Giuseppe Papuzzo | A clinical decision support framework for automatic disease diagnoses | Short Paper |

HI-BI-BI Symposium Session II (14:00-16:00)

| Authors | Title | Type |
|---|---|---|
| Krunal Dhiraj Patel, Andrew Heppner, Gautam Srivastava and Vijay Mago | | Full Paper |
| Anu Shrestha and Francesca Spezzano | | Full Paper |
| Farahnaz Golrooy Motlagh, Saeede Shekarpoor Shekarpour, Amit Sheth, Thirunarayan Krishnaprasad and Michael L. Raymer | Predicting Public Opinion on Drug Legalization: Social Media Analysis and Consumption Trends | Full Paper |
| Hankyu Jang, Samuel Justice, Philip M. Polgreen, Alberto M. Segre, Daniel K. Sewell and Sriram V. Pemmaraju | Evaluating Architectural Changes to Alter Pathogen Dynamics in a Dialysis Unit | Full Paper |

Multidisciplinary Track Session III (14:00-16:00)

| Authors | Title | Time |
|---|---|---|
| Soumajyoti Sarkar, Paulo Shakarian, Mika Armenta, Danielle Sanchez and Kiran Lakkaraju | Can social influence be exploited to compromise security: An online experimental evaluation | 14:00-14:20 |
| Dionisios Sotiropoulos, Ifigeneia Georgoula and Christos Bilanakos | Optimal Influence Strategies in an Oligopolistic Competition Network Environment | 14:20-14:40 |
| Arunkumar Bagavathi, Pedram Bashiri, Shannon Reid, Matthew Phillips and Siddharth Krishnan | Examining Untempered Social Media: Analyzing Cascades of Polarized Conversations | 14:40-15:00 |
| Fernando Henrique Calderon Alvarado, Li-Kai Cheng, Ming-Jen Lin, Yen Hao Huang and Yi-Shin Chen | Content-Based Echo Chamber Detection on Social Media Platforms | 15:00-15:20 |
| Mattia Gasparini, Giorgia Ramponi, Marco Brambilla and Stefano Ceri | | 15:20-15:40 |
| Shuo Zhang and Mayank Kejriwal | Concept Drift in Bias and Sensationalism Detection: An Experimental Study | 15:40-16:00 |

Multidisciplinary Track Session II (11:00-12:30)

| Authors | Title | Time |
|---|---|---|
| Maria Camila Rivera and Subrata Acharya | FastestER: A Web Application to enable effective Emergency Department Service | 11:00-11:20 |
| Abigail Garrett and Naeemul Hassan | Understanding the Silence of Sexual Harassment Victims Through the #WhyIDidntReport Movement | 11:20-11:40 |
| Naeemul Hassan, Manash Kumar Mandal, Mansurul Bhuiyan, Aparna Moitra and Syed Ishtiaque Ahmed | Can Women Break the Glass Ceiling?: An Analysis of #MeToo Hashtagged Posts on Twitter | 11:40-12:00 |
| Roland Molontay and Marcell Nagy | Two Decades of Network Science - as seen through the co-authorship network of network scientists | 12:00-12:20 |
| Apratim Das, Alex Aravind and Mark Dale | | 12:20-12:40 |

Multidisciplinary Track Session I (16:30-18:00)

| Authors | Title | Time |
|---|---|---|
| Apratim Das, Mike Drakos, Alex Aravind and Darwin Horning | | 16:30-16:50 |
| Michelle Bowman and Subrata Acharya | | 16:50-17:10 |
| Meysam Ghaffari, Ashok Srinivasan, Anuj Mubayi, Xiuwen Liu and Krishnan Viswanathan | Next-Generation High-Resolution Vector-Borne Disease Risk Assessment | 17:10-17:30 |
| Dany Perwita Sari and Yun-Shang Chiou | Transformation in Architecture and Spatial Organization at Javanese house | 17:30-17:50 |
| Marcell Nagy and Roland Molontay | | 17:50-18:10 |

Session 1A - Communities I (11:00-12:30)

| Authors | Title | Type | Time |
|---|---|---|---|
| Michele Coscia | | Long Paper | 11:00-11:30 |
| Neda Zarayeneh and Ananth Kalyanaraman | A Fast and Efficient Incremental Approach toward Dynamic Community Detection | Long Paper | 11:30-12:00 |
| Young D. Kwon, Reza Hadi Mogavi, Ehsan Ul Haq, Youngjin Kwon, Xiaojuan Ma and Pan Hui | Effects of Ego Networks and Communities on Self-Disclosure in an Online Social Network | Long Paper | 12:00-12:30 |

Session 1B - Misbehavior & Misinformation I (11:00-12:30)

| Authors | Title | Type | Time |
|---|---|---|---|
| Courtland Vandam, Farzan Masrour, Pang-Ning Tan and Tyler Wilson | You have been CAUTE! Early Detection of Compromised Accounts on Social Media | Long Paper | 11:00-11:30 |
| Thai Le, Kai Shu, Maria D. Molina, Dongwon Lee, S. Shyam Sundar and Huan Liu | | Long Paper | 11:30-12:00 |
| Limeng Cui, Suhang Wang and Dongwon Lee | SAME: Sentiment-Aware Multi-Modal Embedding for Detecting Fake News | Long Paper | 12:00-12:30 |

Session 1C - Network Embedding (11:00-12:30)

| Authors | Title | Type | Time |
|---|---|---|---|
| Jundong Li, Liang Wu, Ruocheng Guo, Chenghao Liu and Huan Liu | Multi-Level Network Embedding with Boosted Low-Rank Matrix Approximation | Long Paper | 11:00-11:30 |
| Aynaz Taheri and Tanya Berger-Wolf | | Long Paper | 11:30-12:00 |
| Benedek Rozemberczki, Ryan Davies, Rik Sarkar and Charles Sutton | | Long Paper | 12:00-12:30 |

Session 2A - Information & Influence Diffusion I (14:00-16:00)

| Authors | Title | Type | Time |
|---|---|---|---|
| Antoine Tixier, Maria Rossi, Fragkiskos Malliaros and Jesse Read | Perturb and Combine to Identify Influential Spreaders in Real-World Networks | Long Paper | 14:00-14:30 |
| Yang Chen and Jiamou Liu | Becoming Gatekeepers Together with Allies: Collaborative Brokerage over Social Networks | Long Paper | 14:30-15:00 |
| Yu Zhang | Diversifying Seeds and Audience in Social Influence Maximization | Short Paper | 15:00-15:20 |
| Arash Ghayoori and Rakesh Nagi | Seed Investment Bounds for Viral Marketing under Generalized Diffusion | Short Paper | 15:20-15:40 |
| Xiao Yang, Seungbae Kim and Yizhou Sun | How Do Influencers Mention Brands in Social Media? Sponsorship Prediction of Instagram Posts | Short Paper | 15:40-16:00 |

Session 2B - Misbehavior & Misinformation II (14:00-16:00)

| Authors | Title | Type | Time |
|---|---|---|---|
| Sooji Han, Jie Gao and Fabio Ciravegna | Neural Language Model Based Training Data Augmentation for Weakly Supervised Early Rumor Detection | Long Paper | 14:00-14:30 |
| Amir Pouran Ben Veyseh, My T. Thai, Thien Huu Nguyen and Dejing Dou | Rumor Detection in Social Networks via Deep Contextual Modeling | Long Paper | 14:30-15:00 |
| Wataru Kudo, Mao Nishiguchi and Fujio Toriumi | Fraudulent User Detection on Rating Networks Based on Expanded Balance Theory and GCNs | Short Paper | 15:00-15:20 |
| Udit Arora, William Scott Pakka and Tanmoy Chakraborty | | Short Paper | 15:20-15:40 |
| Mohammad Raihanul Islam, Sathappan Muthiah and Naren Ramakrishnan | RumorSleuth: Joint Detection of Rumor Veracity and User Stance | Short Paper | 15:40-16:00 |

Session 2C - Network Analysis with Machine Learning I (14:00-16:00)

| Authors | Title | Type | Time |
|---|---|---|---|
| Aravind Sankar, Xinyang Zhang and Kevin Chang | | Long Paper | 14:00-14:30 |
| Suhansanu Kumar, Heting Gao, Changyu Wang, Hari Sundaram and Kevin Chang | Hierarchical Multi-Armed Bandits for Discovering Hidden Populations | Long Paper | 14:30-15:00 |
| Yang Zhang, Hongxiao Wang, Daniel Zhang, Yiwen Lu and Dong Wang | RiskCast: Social Sensing based Traffic Risk Forecasting via Inductive Multi-View Learning | Short Paper | 15:00-15:20 |
| Sumeet Kumar and Kathleen M. Carley | | Short Paper | 15:20-15:40 |
| Renhao Cui, Gagan Agrawal and Rajiv Ramnath | Tweets Can Tell: Activity Recognition using Hybrid Long Short-Term Memory Model | Short Paper | 15:40-16:00 |

Session 3A - Communities II (11:00-12:30)

| Authors | Title | Type | Time |
|---|---|---|---|
| Yulong Pei, George Fletcher and Mykola Pechenizkiy | | Long Paper | 11:00-11:30 |
| Jean Marie Tshimula, Belkacem Chikhaoui and Shengrui Wang | HAR-search: A Method to Discover Hidden Affinity Relationships in Online Communities | Long Paper | 11:30-12:00 |
| Domenico Mandaglio and Andrea Tagarelli | Dynamic Consensus Community Detection and Combinatorial Multi-Armed Bandit | Short Paper | 12:00-12:20 |

Session 3B - Social Media Analysis (11:00-12:20)

| Authors | Title | Type | Time |
|---|---|---|---|
| Marija Stanojevic, Jumanah Alshehri and Zoran Obradovic | Surveying public opinion using label prediction on social media data | Long Paper | 11:00-11:30 |
| Taoran Ji, Xuchao Zhang, Nathan Self, Kaiqun Fu, Chang-Tien Lu and Naren Ramakrishnan | Feature Driven Learning Framework for Cybersecurity Event Detection | Long Paper | 11:30-12:00 |
| Virgile Landeiro and Aron Culotta | Collecting Representative Social Media Samples from a Search Engine by Adaptive Query Generation | Short Paper | 12:00-12:20 |

Session 3C - Network Analysis with Machine Learning II (11:00-12:20)

| Authors | Title | Type | Time |
|---|---|---|---|
| Mahsa Ghorbani, Mahdieh Soleymani Baghshah and Hamid R. Rabiee | MGCN: Semi-supervised Classification in Multi-layer Graphs with Graph Convolutional Networks | Short Paper | 11:00-11:20 |
| Vachik Dave, Baichuan Zhang, Pin-Yu Chen and Mohammad Hasan | Neural-Brane: An inductive approach for attributed network embedding | Short Paper | 11:20-11:40 |
| Kun Tu, Jian Li, Don Towsley, Dave Braines and Liam Turner | gl2vec: Learning Feature Representation Using Graphlets for Directed Networks | Short Paper | 11:40-12:00 |
| Caleb Belth, Fahad Kamran, Donna Tjandra and Danai Koutra | When to Remember Where You Came from: Node Representation Learning in Higher-order Networks | Short Paper | 12:00-12:20 |

Session 4A - Information & Influence Diffusion II (14:00-16:00)

| Authors | Title | Type | Time |
|---|---|---|---|
| Soumajyoti Sarkar, Ashkan Aleali, Paulo Shakarian, Mika Armenta, Danielle Sanchez and Kiran Lakkaraju | Impact of Social Influence on Adoption Behavior: An Online Controlled Experimental Evaluation | Long Paper | 14:00-14:30 |
| Thiago Silva, Alberto Laender and Pedro Vaz de Melo | Characterizing Knowledge-Transfer Relationships in Dynamic Attributed Networks | Long Paper | 14:30-15:00 |
| Emily Fischer, Souvik Ghosh and Gennady Samorodnitsky | | Long Paper | 15:00-15:30 |
| Jianjun Luo, Xinyue Liu and Xiangnan Kong | | Long Paper | 15:30-16:00 |

Session 4B - Elections and Politics (14:00-15:50)

| Authors | Title | Type | Time |
|---|---|---|---|
| Huyen Le, Bob Boynton, Zubair Shafiq and Padmini Srinivasan | A Postmortem of Suspended Twitter Accounts in the 2016 U.S. Presidential Election | Long Paper | 14:00-14:30 |
| Hamid Karimi, Tyler Derr, Aaron Brookhouse and Jiliang Tang | | Long Paper | 14:30-15:00 |
| Indu Manickam, Andrew Lan, Gautam Dasarathy and Richard Baraniuk | Tracing Political Ideology on Twitter During the 2016 U.S. Presidential Election | Long Paper | 15:00-15:30 |
| Alexandru Topirceanu and Radu-Emil Precup | A Novel Methodology for Improving Election Poll Prediction Using Time-Aware Polling | Short Paper | 15:30-15:50 |

Session 4C - Network Analysis I (14:00-16:00)

| Authors | Title | Type | Time |
|---|---|---|---|
| Michele Coscia and Luca Rossi | The Impact of Projection and Backboning on Network Topologies | Long Paper | 14:00-14:30 |
| Katchaguy Areekijseree and Sucheta Soundarajan | | Long Paper | 14:30-15:00 |
| Xiuwen Zheng and Amarnath Gupta | | Short Paper | 15:00-15:20 |
| Laurence Brandenberger, Giona Casiraghi, Vahan Nanumyan and Frank Schweitzer | | Short Paper | 15:20-15:40 |
| Seyed Amin Mirlohi Falavarjani, Ebrahim Bagheri, Jelena Jovanovic and Ali A. Ghorbani | On the Causal Relation between Users' Real-World Activities and their Affective Processes | Short Paper | 15:40-16:00 |

Session 5A - Recommendations (16:30-18:00)

| Authors | Title | Type | Time |
|---|---|---|---|
| Chia-Wei Chen, Sheng-Chuan Chou, Chang-You Tai and Lun-Wei Ku | PGA: Phrase-Guided Attention Web Article Recommendation for Next Clicks and Views | Long Paper | 16:30-17:00 |
| Deqing Yang, Ziyi Wang, Junyang Jiang and Yanghua Xiao | Knowledge Embedding towards the Recommendation with Sparse User-item Interactions | Long Paper | 17:00-17:30 |
| Daniel Zhang, Bo Ni, Qiyu Zhi, Thomas Plummer, Qi Li, Hao Zheng, Qingkai Zeng, Yang Zhang and Dong Wang | Through The Eyes of A Poet: Classical Poetry Recommendation with Visual Input on Social Media | Long Paper | 17:30-18:00 |

Session 5B - Applications I (16:30-18:00)

| Authors | Title | Type | Time |
|---|---|---|---|
| Henry Dambanemuya, Madhav Joshi and Ágnes Horvát | Network Perspective on the Efficiency of Peace Accords Implementation | Long Paper | 16:30-17:00 |
| Pradyumna Prakhar Sinha, Rohan Mishra, Ramit Sawhney and Rajiv Ratn Shah | ASASNet - Exploiting Linguistic Homophily for Suicidal Ideation Detection in Social Media | Short Paper | 17:00-17:20 |
| Sreeja Nair, Adriana Iamnitchi and John Skvoretz | Promoting Social Conventions across Polarized Networks: An Empirical Study | Short Paper | 17:20-17:40 |
| Mayank Kejriwal and Peilin Zhou | Low-supervision urgency detection and transfer in short crisis messages | Short Paper | 17:40-18:00 |

Session 5C - Behavioral Modeling (16:30-17:50)

| Authors | Title | Type | Time |
|---|---|---|---|
| Vanessa Cedeno-Mieles, Zhihao Hu, Yihui Ren, Xinwei Deng, Abhijin Adiga, Christopher Barrett, Saliya Ekanayake, Gizem Korkmaz, Chris Kuhlman, Dustin Machi, Madhav Marathe, S. S. Ravi, Brian Goode, Naren Ramakrishnan, Parang Saraf, Nathan Self, Noshir Contractor, Joshua Epstein and Michael Macy | | Long Paper | 16:30-17:00 |
| Binxuan Huang and Kathleen Carley | A Large-Scale Empirical Study of Geotagging Behavior on Twitter | Long Paper | 17:00-17:30 |
| Rahul Pandey, Carlos Castillo and Hemant Purohit | Modeling Human Annotation Errors to Design Bias-Aware Systems for Social Stream Processing | Short Paper | 17:30-17:50 |

Session 6A - Network Algorithms (11:00-12:20)

| Authors | Title | Type | Time |
|---|---|---|---|
| Peng Ni, Masatoshi Hanai, Wen Jun Tan and Wentong Cai | Efficient Closeness Centrality Computation in Time-Evolving Graphs | Long Paper | 11:00-11:30 |
| Huda Nassar, Austin Benson and David Gleich | | Long Paper | 11:30-12:00 |
| Frédéric Simard | | Short Paper | 12:00-12:20 |

Session 6B - Network Modeling (11:00-12:20)

| Authors | Title | Type | Time |
|---|---|---|---|
| Chen Avin, Zvi Lotker, Yinon Nahum and David Peleg | | Long Paper | 11:00-11:30 |
| Julian Müller and Ulrik Brandes | | Long Paper | 11:30-12:00 |
| Haripriya Chakraborty and Liang Zhao | | Short Paper | 12:00-12:20 |

Session 6C - Misinformation & Online Content (11:00-12:20)

| Authors | Title | Type | Time |
|---|---|---|---|
| Jesper Holmström, Daniel Jonsson, Filip Polbratt, Olav Nilsson, Linnea Lundström, Sebastian Ragnarsson, Anton Forsberg, Karl Andersson and Niklas Carlsson | Do we Read what we Share? Analyzing the Click Dynamic of News Articles Shared on Twitter | Short Paper | 11:00-11:20 |
| Alexandre M. Sousa, Jussara M. Almeida and Flavio Figueiredo | Analyzing and Modeling User Curiosity in Online Content Consumption: A LastFM Case Study | Short Paper | 11:20-11:40 |
| Bhavtosh Rath, Wei Gao and Jaideep Srivastava | Evaluating Vulnerability to Fake News in Social Networks: A Community Health Assessment Model | Short Paper | 11:40-12:00 |
| Kai Shu, Xinyi Zhou, Suhang Wang, Reza Zafarani and Huan Liu | | Short Paper | 12:00-12:20 |

Session 7A - Modeling & Algorithms (14:00-15:50)

Malik Magdon-Ismail and Kshiteesh Hegde

Long Paper 14:00-14:30

Farzan Masrour Shalmani, Pang-Ning Tan and Abdol-Hossein Esfahanian

OPTANE: An OPtimal Transport Algorithm for NEtwork Alignment

Short Paper 14:30-14:50

Feiyu Long, Nianwen Ning, Chenguang Song and Bin Wu

Short Paper 14:50-15:10

Sai Kiran Narayanaswami, Balaraman Ravindran and Venkatesh Ramaiyan

Short Paper 15:10-15:30

Yulong Pei, Jianpeng Zhang, George Fletcher and Mykola Pechenizkiy

Infinite Motif Stochastic Blockmodel for Role Discovery in Networks

Short Paper 15:30-15:50

Session 7B - Applications II (14:00-16:00)

Rashid Tahir, Fareed Zaffar, Faizan Ahmad, Christo Wilson, Hammas Saeed and Shiza Ali

Short Paper 14:00-14:20

Pamela Thomas, Rachel Krohn and Tim Weninger

Dynamics of Team Library Adoptions: An Exploration of GitHub Commit Logs

Short Paper 14:20-14:40

Lea Baumann and Sonja Utz

Short Paper 14:40-15:00

Kaustav Basu and Arunabha Sen

Monitoring Individuals in Drug Trafficking Organizations: A Social Network Analysis

Short Paper 15:00-15:20

Victor S. Bursztyn, Larry Birnbaum and Doug Downey

Thousands of Small, Constant Rallies: A Large-Scale Analysis of Partisan WhatsApp Groups

Short Paper 15:20-15:40

Yo-Der Song, Benjamin Farrelly, Yiying Sun, Mingwei Gong and Aniket Mahanti

Measurement and Analysis of an Adult Video Streaming Service

Short Paper 15:40-16:00

Session 7C - Network Analysis II (14:00-16:00)

Mitchell Goist, Ted Hsuan Yun Chen and Christopher Boylan

Reconstructing and Analyzing the Transnational Human Trafficking Network

Long Paper 14:00-14:30

Pujan Paudel, Trung Nguyen and Amartya Hatua

Long Paper 14:30-15:00

Ninareh Mehrabi, Fred Morstatter, Nanyun Peng and Aram Galstyan

Debiasing Community Detection: The Importance of Lowly Connected Nodes

Short Paper 15:00-15:20

Obaida Hanteer and Luca Rossi

Short Paper 15:20-15:40

Mahboubeh Ahmadalinezhad, Masoud Makrehchi and Neil Seward

Lineup Performance Prediction Through Network Analysis

Short Paper 15:40-16:00

Abstract

Rating platforms provide users with useful information on products or other users. However, fake ratings are sometimes generated by fraudulent users. In this paper, we tackle the task of fraudulent user detection on rating platforms. We propose an end-to-end framework based on Graph Convolutional Networks (GCNs) and expanded balance theory, which properly incorporates both the signs and the directions of edges. Experimental results on four real-world datasets show that the proposed framework outperforms the baselines in most settings, often achieving the best results. In particular, the framework shows remarkable stability in inductive settings, which correspond to detecting new fraudulent users on rating platforms. Furthermore, using expanded balance theory, we provide new insight into the behavior of users in rating networks: fraudulent users form a faction to deal with negative ratings from other users. By using the proposed framework, the owner of a rating platform can detect fraudulent users earlier and consistently provide users with more credible information.

Abstract

When studying a network, it is often of interest to understand the robustness of that network to noise. Network robustness has been studied in a variety of contexts, examining network properties such as the number of connected components and the lengths of shortest paths. In this work, we present a new network robustness measure, which we refer to as 'sampling robustness'. The goal of the sampling robustness measure is to quantify the extent to which a network sample collected from a graph with errors is a good representation of a network sample collected from that same graph without errors. These errors may be introduced by humans or by the system (e.g., mistakes by respondents or a bug in an API program), and may affect the performance of a data collection algorithm and the quality of the obtained sample. Thus, when data analysts analyze the sampled network, they may wish to know whether such errors will affect future analysis results. We demonstrate that sampling robustness depends on a few easily computed properties of the network: the leading eigenvalue, the average node degree, and the clustering coefficient. In addition, we introduce regression models for estimating sampling robustness given an obtained sample. Our models estimate sampling robustness with an MSE below 0.0015 and an R-squared of up to 0.75.
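The abstract names three cheap network properties that sampling robustness depends on. The pure-Python sketch below computes them for an undirected graph stored as an adjacency dict (an assumed input format). The regression models themselves are not described here, so this only produces candidate input features; the clustering value is the average local clustering coefficient, which is one of several common definitions.

```python
from itertools import combinations


def graph_properties(adj, iters=500):
    """Return (leading eigenvalue, average degree, average clustering coefficient).

    adj maps each node to the set of its neighbours (undirected, no self-loops).
    The leading-eigenvalue estimate assumes a connected graph.
    """
    nodes = list(adj)
    n = len(nodes)
    avg_degree = sum(len(adj[v]) for v in nodes) / n

    # Average local clustering coefficient; nodes with degree < 2 contribute 0.
    total_cc = 0.0
    for v in nodes:
        k = len(adj[v])
        if k < 2:
            continue
        links = sum(1 for a, b in combinations(adj[v], 2) if b in adj[a])
        total_cc += 2 * links / (k * (k - 1))
    avg_clustering = total_cc / n

    # Power iteration on A + I: the shift avoids oscillation on bipartite
    # graphs; subtracting 1 at the end recovers the leading eigenvalue of A.
    x = {v: 1.0 for v in nodes}
    lam = 0.0
    for _ in range(iters):
        y = {v: x[v] + sum(x[u] for u in adj[v]) for v in nodes}
        lam = max(abs(val) for val in y.values())
        x = {v: val / lam for v, val in y.items()}
    return lam - 1.0, avg_degree, avg_clustering
```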

Abstract

Over the past decade, network analysis has played an important role in understanding many problems. However, when performing an analysis task, some information may be leaked or scattered among individuals who may not be willing to share it (e.g., the number of an individual's friends and who they are). Secure multi-party computation (MPC) allows individuals to jointly perform any computation without revealing each individual's input. MPC has been widely used for distributed databases, but there are only a few works on graph mining applications. Here, we present two novel secure frameworks that allow a node to securely compute its clustering coefficient, and we evaluate the trade-off between efficiency and security of several proposed instantiations. Our results show that the cost of secure computation depends heavily on network structure. This work is a step towards developing a library of secure graph operations in the future.

Abstract

In anagram games, players are given letters and must form as many words as possible within a specified time. Anagram games have been used in controlled experiments to study problems such as collective identity, effects of goal-setting, internal-external attributions, test anxiety, and others. The majority of work on anagram games involves individual players. Recently, work has expanded to group anagram games, where players cooperate by sharing letters. In this work, we analyze experimental data from online social networked experiments of group anagram games. We develop mechanistic and data-driven models of human decision-making to predict detailed game player actions (e.g., what word to form next). With these results, we develop a composite agent-based modeling and simulation platform that incorporates the models from data analysis. We compare model predictions against experimental data, which enables us to provide explanations of human decision-making and behavior. Finally, we provide illustrative case studies using agent-based simulations to demonstrate the efficacy of the models in providing insights beyond those obtainable from experiments alone.

Abstract

In the future, analysis of social networks will conceivably move from graphs to hypergraphs. However, theory has not yet caught up with this type of data organizational structure. By introducing and analyzing a general model of preferential attachment hypergraphs, this paper takes a step towards narrowing this gap. We consider a random preferential attachment model $H(p,Y)$ for network evolution that allows arrivals of both nodes and hyperedges of random size. At each time step $t$, one of two events occurs: (1) [vertex arrival event:] with probability $p>0$, a new vertex arrives and a new hyperedge of size $Y_t$, containing the new vertex and $Y_t-1$ existing vertices, is added to the hypergraph; or (2) [hyperedge arrival event:] with probability $1-p$, a new hyperedge of size $Y_t$, containing $Y_t$ existing vertices, is added to the hypergraph. In both cases, the involved existing vertices are chosen independently at random according to the preferential attachment rule, i.e., with probability proportional to their degree, where the degree of a vertex is the number of edges containing it. Assuming general restrictions on the distribution of $Y_t$, we prove that the $H(p,Y)$ model generates *power law networks*, i.e., the expected fraction of nodes with degree $k$ is proportional to $k^{-1-\Gamma}$, where $\Gamma=\lim_{t\rightarrow\infty}\frac{\sum_{i=0}^{t-1}E[Y_i]}{t(E[Y_t]-p)}\in (0,\infty)$. This extends the special case of preferential attachment graphs, where $Y_t=2$ for every $t$, yielding $\Gamma=2/(2-p)$. Therefore, our results show that the exponent of the degree distribution is sensitive to whether one considers the structure of a social network to be a hypergraph or a graph. We discuss, and provide examples for, the implications of these considerations.
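The two event types of $H(p,Y)$ can be simulated directly. The sketch below is a hypothetical illustration, not the authors' code: it starts from a single vertex in a singleton hyperedge (an assumed seed), and it draws existing vertices independently with replacement, so a hyperedge may list the same vertex twice. Preferential attachment is implemented by sampling uniformly from a list in which each vertex appears once per hyperedge it belongs to.

```python
import random


def simulate_hpy(steps, p, sample_size, seed=0):
    """Simulate the H(p, Y) preferential attachment hypergraph.

    sample_size(rng) draws the hyperedge size Y_t (must be >= 1).
    Returns (number of vertices, list of hyperedges as vertex lists).
    """
    rng = random.Random(seed)
    # Assumed seed state: vertex 0 contained in one singleton hyperedge.
    n_vertices = 1
    hyperedges = [[0]]
    # Each vertex appears once per hyperedge membership, so a uniform draw
    # picks a vertex with probability proportional to its degree.
    memberships = [0]
    for _ in range(steps):
        y = sample_size(rng)
        if rng.random() < p:
            # Vertex arrival: the new vertex plus y - 1 existing vertices.
            edge = [rng.choice(memberships) for _ in range(y - 1)]
            edge.append(n_vertices)
            n_vertices += 1
        else:
            # Hyperedge arrival: y existing vertices.
            edge = [rng.choice(memberships) for _ in range(y)]
        hyperedges.append(edge)
        memberships.extend(edge)
    return n_vertices, hyperedges
```

For example, `simulate_hpy(100000, 0.5, lambda rng: 2)` corresponds to the graph case $Y_t=2$, for which the abstract gives $\Gamma=2/(2-0.5)=4/3$, i.e., a degree tail decaying roughly like $k^{-7/3}$. A vertex's degree is its number of occurrences across the returned hyperedges.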

Abstract

The prediction of opinion distribution and evolution in real-world scenarios represents a major scientific challenge for current social network analysis. Indeed, multiple prediction solutions based on statistics and economic indices have been proposed over time, but as our understanding of diffusion phenomena improves, it has become clear that temporal characteristics introduce even more uncertainty. As such, the current literature is not yet able to define truly reliable models for the evolution of political opinion, marketing preferences, or social unrest. Inspired by micro-scale opinion dynamics, we develop an original time-aware (TA) methodology that improves the prediction of opinion distribution by modeling opinion as a function that spikes up when opinion is expressed and slowly dampens down otherwise. After a parametric analysis, we validate our TA method on survey data from the US presidential elections of 2012 and 2016. Comparing our time-aware method (TA) with classic survey averaging (SA) and cumulative vote counting (CC), we find that our method is substantially closer to the real election outcomes. On average, SA is 6.3% off, CC is 5.6% off, and TA is only 1.5% off from the final registered election outcomes; this difference translates into a 75% prediction improvement for our TA method. As our work falls in line with studies on the microscopic temporal dynamics of social networks, we find evidence of how macroscopic prediction can be improved using time-awareness.
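The abstract describes opinion as a signal that spikes when expressed and slowly dampens otherwise, without giving the decay law. The sketch below assumes an exponential decay with a hypothetical time constant `tau` and lets only each user's most recent expression count; both choices are illustrative assumptions, not the authors' TA formulation.

```python
import math
from collections import defaultdict


def time_aware_distribution(expressions, now, tau):
    """Estimate an opinion distribution at time `now`.

    expressions: iterable of (time, user, option) records.
    A user's most recent expression (at or before `now`) spikes their support
    for that option to 1; the support then decays as exp(-(now - time) / tau).
    """
    latest = {}
    for t, user, option in expressions:
        if t <= now and (user not in latest or t > latest[user][0]):
            latest[user] = (t, option)
    weights = defaultdict(float)
    for t, option in latest.values():
        weights[option] += math.exp(-(now - t) / tau)
    total = sum(weights.values())
    # Normalize decayed support into a distribution over options.
    return {opt: w / total for opt, w in weights.items()} if total else {}
```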

Abstract

The scarcity and class imbalance of training data are known issues in current rumor detection tasks. We propose a straightforward and general-purpose data augmentation technique that is beneficial to early rumor detection relying on event propagation patterns. The key idea is to exploit massive unlabeled event datasets on social media to augment limited labeled rumor source tweets. This work is based on rumor spreading patterns revealed by recent rumor studies and on the semantic relatedness between labeled and unlabeled data. A state-of-the-art neural language model (NLM) and large credibility-focused Twitter corpora are employed to learn context-sensitive representations of rumor tweets. Six different real-world events based on three publicly available rumor datasets are employed in our experiments to provide a comparative evaluation of the effectiveness of the method. The results show that our method can expand the size of an existing rumor dataset by nearly 200% and the corresponding social context by 100% for conversation threads (and, additionally, for retweets) with reasonable quality. Preliminary experiments with a state-of-the-art deep learning-based rumor detection model show that augmented data can alleviate the overfitting and class imbalance caused by limited training data and can help to train complex neural networks (NNs). With augmented data, the performance of rumor detection can be improved by 6.4%. Our experiments also indicate that augmented training data can help rumor detection models generalize to unseen new rumors.

Abstract

SimRank is a widely studied link-based similarity measure that is known for its simple, yet powerful philosophy that two nodes are similar if they are referenced by similar nodes. While this philosophy has been the basis of several improvements, there is another useful, albeit less frequently discussed, interpretation of SimRank known as the Random Surfer-Pair Model. In this work, we show that other well-known measures related to SimRank can also be reinterpreted using Random Surfer-Pair Models, and establish a mathematically sound, general and unifying framework for several link-based similarity measures. This also provides new insights into their functioning and allows these measures to be used in a Monte Carlo framework, which provides several computational benefits. As an illustration of its utility in designing measures, we develop a new measure based on two existing measures under this framework, and empirically demonstrate its efficacy.
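Under the random surfer-pair model, SimRank $s(a,b)$ equals the expected value of $C^{\tau}$, where $\tau$ is the first step at which two surfers, starting at $a$ and $b$ and each moving to a uniformly random in-neighbour at every step, land on the same node. A minimal Monte Carlo sketch of plain SimRank under that interpretation follows; the decay `c`, the walk count, and the truncation length `max_len` are assumed defaults, and truncation slightly underestimates the score. It does not implement the paper's new measure.

```python
import random


def simrank_mc(in_nbrs, a, b, c=0.8, walks=10000, max_len=20, seed=0):
    """Monte Carlo SimRank via the random surfer-pair model.

    in_nbrs maps each node to a list of its in-neighbours.
    Each walk contributes c**tau if the surfers first meet at step tau,
    and 0 if they never meet within max_len steps or a surfer gets stuck
    at a node with no in-neighbours.
    """
    if a == b:
        return 1.0
    rng = random.Random(seed)
    total = 0.0
    for _ in range(walks):
        u, v = a, b
        for step in range(1, max_len + 1):
            if not in_nbrs.get(u) or not in_nbrs.get(v):
                break  # a surfer cannot move backwards any further
            u = rng.choice(in_nbrs[u])
            v = rng.choice(in_nbrs[v])
            if u == v:
                total += c ** step
                break
    return total / walks
```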

Abstract

Social trading platforms provide a forum for investors to share their analysis and opinions. Posts on these platforms are characterized by narrative styles that are very different from posts on general social platforms, for instance tweets. As a result, recommendation systems for social trading platforms should leverage tailor-made latent features. This paper presents a representation for these latent features in both textual data and market information. A real-world dataset is adopted to conduct experiments involving a novel task called next cashtag prediction. We propose a joint learning model with an attentive capsule network. Experimental results are positive for both the proposed methods and the corresponding auxiliary tasks.

Abstract

Clickbait is an attractive yet misleading headline that lures readers into click-conversion. The development of robust clickbait detection models has, however, been hampered by the shortage of high-quality labeled training samples. To overcome this challenge, we investigate how to exploit human-written and machine-generated synthetic clickbaits. We first ask crowdworkers and journalism students to generate clickbaity news headlines. Second, we utilize deep generative models to generate clickbaity headlines. Through empirical evaluations, we demonstrate that synthetic clickbaits by human entities and deep generative models are consistently useful in improving the accuracy of various prediction models, by as much as 14.5% in AUC, across two real datasets and different types of algorithms. In particular, we observe an improvement in accuracy of up to 8.5% in AUC even for top-ranked clickbait detectors from the Clickbait Challenge 2017. Our study proposes a novel direction to address the shortage of labeled training data, one of the fundamental bottlenecks in supervised learning, by means of synthetic training data with reinforced domain knowledge. It also provides a solution for distinguishing between bot-generated and human-written clickbaits, thus aiding the work of moderators and better alerting news consumers.

Abstract

For trajectory data that tend to have beyond first-order (i.e., non-Markovian) dependencies, higher-order networks have been shown to accurately capture details lost with the standard aggregate network representation. At the same time, representation learning has shown success on a wide range of network tasks, removing the need to hand-craft features for these tasks. In this work, we propose a node representation learning framework called EVO or Embedding Variable Orders, which captures non-Markovian dependencies by combining work on higher-order networks with work on node embeddings. We show that EVO outperforms baselines in tasks where high-order dependencies are likely to matter, demonstrating the benefits of considering high-order dependencies in node embeddings. We also provide insights into when it does or does not help to capture these dependencies. To the best of our knowledge, this is the first work on representation learning for higher-order networks.
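To illustrate what a higher-order network captures, the sketch below builds a fixed second-order network from trajectories: each node is a (previous, current) pair, so transitions out of a node remember where the walker came from. This is an assumed, simplified construction; EVO itself uses variable orders and an embedding step, neither of which is shown.

```python
from collections import Counter


def second_order_network(trajectories):
    """Build a fixed second-order network from trajectories.

    Each higher-order node is a pair (previous, current); the edge
    (prev, cur) -> (cur, nxt) is weighted by how often the path
    prev -> cur -> nxt occurs across all trajectories.
    """
    edges = Counter()
    for path in trajectories:
        for prev, cur, nxt in zip(path, path[1:], path[2:]):
            edges[((prev, cur), (cur, nxt))] += 1
    return edges
```

For the trajectories A, C, D and B, C, E and A, C, D, a first-order network would merge both paths at C (edges C to D and C to E), whereas here (A, C) to (C, D) and (B, C) to (C, E) stay separate.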

Abstract

Role discovery and community detection are two essential tasks in network analytics, where roles denote the global structural patterns of nodes and communities represent the local connections of nodes. Previous studies viewed these two tasks as orthogonal and solved them independently, entirely neglecting the relation between them. However, it is intuitive that roles and communities in a network are correlated and complementary to each other. In this paper, we propose a novel model for simultaneous role and community detection (REACT) in networks. REACT uses non-negative matrix tri-factorization (NMTF) to detect roles and communities and uses the $L_{2,1}$ norm as a regularizer to capture the diversity relation between roles and communities. The proposed model has several advantages compared with existing methods: (1) it incorporates the diversity relation between roles and communities to detect them simultaneously in a unified model, and (2) it uses NMTF to provide extra information about the interaction patterns between roles and between communities. To analyze the performance of REACT, we conduct experiments on several real-world social networks (SNs) from different domains. Compared with state-of-the-art community detection and role discovery methods, REACT performs best on both role and community detection tasks. Moreover, our model provides a better interpretation of the interaction patterns between communities and between roles.
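For a matrix $M$ with rows $m_i$, the $L_{2,1}$ norm is $\|M\|_{2,1} = \sum_i \|m_i\|_2$, i.e., the sum of the Euclidean norms of the rows; penalizing it tends to drive whole rows to zero. The sketch below computes it using this row convention. Which factor matrix REACT regularizes, and in which orientation, is not stated in the abstract.

```python
import math


def l21_norm(matrix):
    """L_{2,1} norm: the sum over rows of each row's Euclidean (L2) norm."""
    return sum(math.sqrt(sum(x * x for x in row)) for row in matrix)
```

For example, the rows of `[[3, 4], [0, 0], [1, 0]]` have L2 norms 5, 0 and 1, so the $L_{2,1}$ norm is 6.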

Abstract

Role/block discovery is an essential task in network analytics and has therefore attracted significant attention recently. Previous studies on role discovery either relied on first- or second-order structural information to group nodes, neglecting higher-order information, or required the number of roles/blocks as input, which may be unknown in practice. To overcome these limitations, in this paper we propose a novel generative model, the infinite motif stochastic blockmodel (IMM), for role discovery in networks. IMM takes advantage of high-order motifs in the generative process, and it is a nonparametric Bayesian model that can automatically infer the number of roles. To validate the effectiveness of IMM, we conduct experiments on synthetic and real-world networks. The results demonstrate that IMM outperforms other blockmodels in the role discovery task.
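IMM is described as nonparametric Bayesian, inferring the number of roles rather than taking it as input. A standard prior with that property in infinite blockmodels is the Chinese restaurant process (CRP); the abstract does not say which prior IMM uses, so the sketch below, which samples a node partition from a CRP with an assumed concentration `alpha`, only illustrates how the number of blocks can grow with the data.

```python
import random


def sample_crp(n, alpha, seed=0):
    """Draw a partition of n nodes from a Chinese restaurant process.

    Node i joins existing block k with probability size_k / (i + alpha)
    and opens a new block with probability alpha / (i + alpha).
    Returns (block assignment per node, size of each block).
    """
    rng = random.Random(seed)
    assignments, sizes = [], []
    for i in range(n):
        r = rng.random() * (i + alpha)
        acc = 0.0
        for k, size in enumerate(sizes):
            acc += size
            if r < acc:
                assignments.append(k)
                sizes[k] += 1
                break
        else:
            # r fell in the alpha-weighted slot: open a new block.
            assignments.append(len(sizes))
            sizes.append(1)
    return assignments, sizes
```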

Abstract

An information broker in a social network acts as a gatekeeper that controls the information or resources flowing into her group and decides whether the unconnected agents in the group have access to them. In this paper, we study the problem of identifying a group of agents as information brokers in a social network. We focus on the case in which brokers hold heterogeneous influencing capabilities, and we analyze social networks with directed relationships. To this end, we define a *collaborative broker team* over a directed network, which provides a selection of agents with different influencing power to control the whole network. We ask how to find a smallest collaborative broker team so that the network can be well controlled with fewer ties. Assuming that agents have only two types of control capability, we investigate the fundamental case of this problem and formalize it as the *collaborative brokerage* problem. We show that collaborative brokerage is NP-hard in general, yet has polynomial-time optimal solutions over directed trees. We then develop efficient algorithms for arbitrary directed networks. To evaluate the algorithms, we run experiments over networks generated using well-known random graph models and over real-world datasets. Experimental results show that our algorithms produce relatively good solutions at a faster speed.

Abstract

News and information spread over social media can have a big impact on thoughts, beliefs, and opinions. It is therefore important to understand the sharing dynamics on these forums. However, most studies trying to capture these dynamics rely only on Twitter's open APIs to measure how frequently articles are shared/retweeted, and therefore do not capture how many users actually read the articles linked in these tweets. To address this problem, in this paper, we first develop a novel measurement methodology, which combines the Twitter streaming API, the Bitly API, and careful sample rate selection to simultaneously collect and analyze the timelines of both the number of retweets and the number of clicks generated by news article links. Second, we present a temporal analysis of the news cycle based on five-day-long traces (containing both clicks and retweets over time) for the news article links discovered during a seven-day period. Among other things, our analysis highlights differences in the relative timelines observed for clicks and retweets (e.g., retweet data often lags and underestimates the bias towards reading popular links/articles), and helps answer important questions regarding differences in how age-based biases and churn affect how frequently news articles shared on Twitter are accessed over time. Our temporal findings are consistent both when comparing data collected a year apart (2017 vs 2018) and across articles published on news websites with vastly different characteristics.

Abstract

Link Streams were proposed as a model of temporal networks. We seek to understand topological and temporal properties of those objects through efficiently computing the distances, latencies and lengths of shortest fastest paths. We develop different algorithms to compute those values. One purpose of this study is to help develop algorithms to compute centrality functions on link streams such as the betweenness and the closeness.
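A basic building block for latencies in a link stream is the earliest arrival time from a source. The sketch below assumes undirected, instantaneous link occurrences `(t, u, v)` and a fixed traversal time `delta > 0`, processed in time order; these are simplifying assumptions, and it does not compute the shortest fastest paths studied in the paper. The latency from `source` to a node `v` for a departure at `t0` is then `arrival[v] - t0`.

```python
import math


def earliest_arrival(links, source, t0, delta=1.0):
    """Earliest arrival times from `source`, departing at time t0.

    links: iterable of (t, u, v) undirected link occurrences.
    A link present at time t can be crossed from a node reached at or
    before t, and crossing it is assumed to take `delta` > 0 time units.
    """
    arrival = {source: t0}
    for t, u, v in sorted(links):  # process link occurrences in time order
        if t < t0:
            continue
        for a, b in ((u, v), (v, u)):
            if arrival.get(a, math.inf) <= t and t + delta < arrival.get(b, math.inf):
                arrival[b] = t + delta
    return arrival
```

With `delta > 0`, a node reached through a link at time `t` is only available after `t`, so the sketch never chains two links that occur at the same instant.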

Abstract

Consuming news from social media is becoming increasingly popular. Social media appeals to users due to its fast dissemination of information, low cost, and easy access. However, social media also enables the wide spread of fake news. Because of the detrimental societal effects of fake news, detecting fake news has attracted increasing attention. However, detection performance using only news content is generally unsatisfactory, as fake news is written to mimic true news. Thus, there is a need for an in-depth understanding of the relationship between user profiles on social media and fake news. In this paper, we study the challenging problem of understanding and exploiting user profiles on social media for fake news detection. In an attempt to understand connections between user profiles and fake news, first, we measure users' sharing behaviors on social media and group representative users who are more likely to share fake and real news; then, we perform a comparative analysis of explicit and implicit profile features between these user groups, which reveals their potential to help differentiate fake news from real news. To exploit user profile features, we demonstrate their usefulness in a fake news classification task. We further validate the effectiveness of these features through feature importance analysis. The findings of this work lay the foundation for deeper exploration of user profile features on social media and enhance the capabilities for fake news detection.

Abstract

Community detection and evolution have been widely studied in the last few years, especially for network systems that are inherently dynamic and undergo different types of changes in their structure and organization in communities. Because of the inherent uncertainty and dynamicity in such network systems, we argue that temporal community detection problems can profitably be solved under a particular class of multi-armed bandit problems, namely combinatorial multi-armed bandits (CMAB). More specifically, we propose a CMAB-based methodology for the novel problem of dynamic consensus community detection, i.e., computing a single community structure that encompasses all the information available in the sequence of observed temporal snapshots of a network, so that it is representative of the knowledge available from the community structures at the different time steps. Unlike existing approaches, our key idea is to produce a dynamic consensus solution for a temporal network that uniquely embeds both long-term changes in community formation and newly observed community structures.

Abstract

Finding clusters in a network is practically important in many applications and has been studied by many researchers. The most commonly used methods are spectral clustering and Newman’s modularity maximization. However, there has been no unified view of them. In this study, we introduce a new guiding principle based on correspondence analysis to obtain nodes’ coordinates, and discuss its equivalence to spectral clustering and its relationship to Newman’s modularity.
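Newman's modularity, one of the two methods the abstract relates, can be written as $Q = \sum_c \left[ L_c/m - \left(d_c/2m\right)^2 \right]$, where $m$ is the number of edges, $L_c$ the number of edges inside community $c$, and $d_c$ the total degree of its nodes. The sketch below evaluates $Q$ for a given partition of a simple undirected graph (no self-loops or weights, by assumption); the correspondence-analysis approach itself is not shown.

```python
from collections import defaultdict


def modularity(edges, community):
    """Newman's modularity Q of a partition of a simple undirected graph.

    edges: list of (u, v) pairs; community: dict node -> community label.
    Uses Q = sum_c [L_c / m - (d_c / (2m))**2].
    """
    m = len(edges)
    inside = defaultdict(int)  # L_c: edges with both endpoints in c
    degree = defaultdict(int)  # d_c: total degree of nodes in c
    for u, v in edges:
        degree[community[u]] += 1
        degree[community[v]] += 1
        if community[u] == community[v]:
            inside[community[u]] += 1
    return sum(inside[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)
```

For two triangles joined by a single edge and split into the two triangles, $m=7$, and each side has $L_c=3$ and $d_c=7$, so $Q = 2(3/7 - 1/4) = 5/14 \approx 0.357$.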

Abstract

Closeness centrality is one of the key indicators of vertex importance in social network analytics. Since social networks are constantly growing, it is essential to monitor a vertex’s closeness centrality through time in order to study the trend in its influence. In this paper, we model dynamic social networks as a time-evolving graph (a sequence of graph snapshots through time) and work on the problem of computing a vertex’s closeness centrality for all graph snapshots. We propose novel algorithms that efficiently utilize graph temporal information, and give a theoretical analysis of their time complexity, which is shown to be low for real-world social networks. Experiments on various real-world data sets show speedups of one to two orders of magnitude compared to existing graph topology based algorithms. Lastly, we create synthetic data sets to show how topological and temporal graph features affect the closeness centrality computation time for both existing and proposed approaches.
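As a point of reference, the naive approach recomputes a vertex's closeness from scratch on each snapshot with a breadth-first search. The sketch below does exactly that for unweighted graphs, defining closeness as $(r-1)/\sum_u d(v,u)$ over the $r$ nodes reachable from $v$ (one common convention, assumed here). The paper's algorithms, which exploit temporal information to avoid this repeated work, are not shown.

```python
from collections import deque


def closeness(adj, v):
    """Closeness of v in an unweighted graph: (r - 1) / sum of BFS distances,
    where r counts the nodes reachable from v (including v itself)."""
    dist = {v: 0}
    queue = deque([v])
    while queue:
        u = queue.popleft()
        for w in adj.get(u, ()):
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total else 0.0


def closeness_over_time(snapshots, v):
    """Baseline: recompute v's closeness independently on every snapshot."""
    return [closeness(adj, v) for adj in snapshots]
```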

Abstract

Heterogeneous Information Networks (HINs) comprise nodes of different types inter-connected through diverse semantic relationships. In many real-world applications, nodes in information networks are often associated with additional attributes, resulting in Attributed HINs (or AHINs). In this paper, we study semi-supervised learning (SSL) on AHINs to classify nodes based on their structure, node types and attributes, given limited supervision. Recently, Graph Convolutional Networks (GCNs) have achieved impressive results in several graph-based SSL tasks. However, they operate on homogeneous networks and are completely agnostic to the semantics of typed nodes and relationships in real-world HINs. In this paper, we seek to bridge the gap between semantic-rich HINs and the neighborhood aggregation paradigm of graph neural networks, to generalize GCNs through metagraph semantics. We propose a novel metagraph convolution operation to extract features from local metagraph-structured neighborhoods, thus capturing semantic higher-order relationships in AHINs. Our proposed neural architecture Meta-GNN extracts features of diverse semantics by utilizing multiple metagraphs, and employs a novel metagraph-attention module to learn personalized metagraph preferences for each node. Our semi-supervised node classification experiments on multiple real-world AHIN datasets indicate significant performance gains of 6% Micro-F1 on average over state-of-the-art AHIN baselines. Visualizations of metagraph attention weights yield interpretable insights into their relative task-specific importance.

Abstract

Community detection in complex networks has attracted a lot of interest across scientific fields. However, current community detection algorithms mainly focus on unipartite networks. In this paper, we propose a new definition of $K$-partite modularity and a new method that strictly follows the idea of the original Louvain algorithm. Compared with other algorithms, our method is more intuitive and easier to implement. We evaluate it on both synthetic and real-world networks. Experimental results show that our method not only obtains better partitions but also scales to large datasets.

Abstract

This paper proposes a novel algorithm to discover hidden individuals in a social network. The problem is increasingly important for social scientists, as the populations they study (e.g., individuals with mental illness) converse online. Since members of these populations do not use the category (e.g., mental illness) to describe themselves, directly querying with text is non-trivial. To bypass the limitations of network and query rewriting frameworks, we focus on identifying hidden populations through attributed search. We propose a hierarchical Multi-Armed Bandit (DT-TMP) sampler that uses a decision tree coupled with reinforcement learning to query the combinatorial attributed search space by exploring and expanding along high-yielding decision-tree branches. A comprehensive set of experiments over a suite of twelve sampling tasks on three online web platforms and three offline entity datasets reveals that DT-TMP outperforms all baseline samplers by a margin of up to 54% on Twitter and 48% on RateMDs. An extensive ablation study confirms DT-TMP's superior performance under different sampling scenarios.

Abstract

Detection of compromised social media accounts is an important problem, as compromised accounts can be exploited by hackers to spread false and misleading information. In particular, early detection of compromised accounts is essential to mitigating the damage caused by the hackers' posts, which may range from victim shaming to causing widespread public panic and civil unrest. This paper proposes CAUTE, a deep learning framework that simultaneously learns the feature embeddings of users and their posts in order to identify which, if any, of their posts were written by a different person, i.e., a hacker. Using Twitter as an example of a social media platform, CAUTE learns a tweet-to-user encoder to infer the user features from tweet features and a user-to-tweet encoder to predict the tweet content from a combination of the user features and the tweet meta features. The residual errors of both encoders are then fed into a fully-connected neural network layer to detect whether a post was published by the specified user or by a hacker. Experimental results show that the features learned by CAUTE are more informative than those generated by conventional representation learning methods. Additionally, CAUTE outperforms several state-of-the-art baseline algorithms in overall performance and can effectively detect compromised posts early without generating too many false alarms.

Abstract

Relationship extraction and network fusion are hot topics in current social network mining research. Most previous work is based on single-source data. However, the relationships portrayed by single-source data are not sufficient to characterize real-world relationships. To solve this problem, this paper proposes a Semi-supervised Fusion framework for Multiple Network (SFMN), which uses the gradient boosting decision tree (GBDT) algorithm to fuse the information of multi-source networks into a single network. Our framework aims to take advantage of multi-source network fusion to improve the accuracy of network construction. Experiments show that our method improves the structural and community accuracy of the constructed social networks, allowing our framework to outperform several state-of-the-art methods.

Abstract

Bipartite networks are a well-known strategy for studying a variety of phenomena. The common way to deal with this type of network is to project the bipartite data into a unipartite weighted graph and then use a backboning technique to extract only the meaningful edges. Despite the wide availability of different methods for both projection and backboning, we believe that little attention has been paid to the effect that the combination of these two processes has on the data and on the resulting network topology. In this paper we study the effect that the possible combinations of projection and backboning techniques have on a bipartite network. We show that the 12 methods group into two clusters that produce unipartite networks with very different topologies. We also show that the resulting level of network centralization is strongly affected by the combination of projection and backboning applied.
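
To make the two-step pipeline concrete, here is a minimal sketch of one projection followed by one backboning method. Both choices are illustrative assumptions, since the abstract does not list the 12 methods compared: the projection weights each pair of left-side nodes by the number of right-side neighbours they share, and the backbone is the disparity filter of Serrano, Boguñá and Vespignani, which keeps an edge if, for at least one endpoint, its share of that node's strength is significant at level `alpha` under a uniform null model. Function names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def project(bipartite_edges):
    """Project (left, right) edges onto the left nodes.

    Each left-left edge is weighted by the number of right nodes the pair shares.
    """
    members = defaultdict(set)  # right node -> set of its left neighbours
    for left, right in bipartite_edges:
        members[right].add(left)
    weights = defaultdict(int)
    for lefts in members.values():
        for a, b in combinations(sorted(lefts), 2):
            weights[(a, b)] += 1
    return dict(weights)


def disparity_backbone(weights, alpha=0.05):
    """Keep edges that pass the disparity filter for at least one endpoint."""
    strength = defaultdict(float)
    degree = defaultdict(int)
    for (a, b), w in weights.items():
        for node in (a, b):
            strength[node] += w
            degree[node] += 1
    backbone = {}
    for (a, b), w in weights.items():
        for node in (a, b):
            k = degree[node]
            if k > 1:  # degree-1 nodes are skipped here (a simplifying choice)
                p_value = (1 - w / strength[node]) ** (k - 1)
                if p_value < alpha:
                    backbone[(a, b)] = w
                    break
    return backbone
```

On a toy graph where A and B share eight items while A shares one item each with C and D, the projection yields weights 8, 1 and 1, and only the A-B edge survives the filter at `alpha = 0.05`. Swapping either function changes the resulting topology, which is the kind of interaction the paper studies.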

Abstract

Discovering communities in complex networks means grouping nodes that are similar to each other in order to uncover latent information about them. There are hundreds of different algorithms for the community detection task, each with its own understanding and definition of what a "community" is. Dozens of review works attempt to bring order to this diverse landscape, classifying community discovery algorithms by the process they employ to detect communities, by their explicitly stated definition of community, or by their performance on a standardized task. In this paper, we classify community discovery algorithms according to a fourth criterion: the similarity of their results. We create an Algorithm Similarity Network (ASN), whose nodes are the community detection approaches, connected if they return similar groupings. We then perform community detection on this network, grouping algorithms that consistently return the same partitions or overlapping coverage across more than one thousand synthetic and real-world networks. This paper is an attempt to create a similarity-based classification of community detection algorithms based on empirical data. It improves over the state of the art by comparing more than seventy approaches, and discovers that the ASN contains well-separated groups, making it a sensible tool for helping practitioners choose algorithms that fit their analytic needs.
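
A toy version of building an ASN can be sketched as follows. The similarity measure and threshold are assumptions, not taken from the paper: two partitions of the same nodes are compared with normalized mutual information (NMI, geometric-mean normalization), and two algorithms are linked when their NMI reaches a hypothetical `threshold`. The paper also compares overlapping coverages, which this sketch does not handle.

```python
import math
from collections import Counter
from itertools import combinations


def entropy(labels):
    """Shannon entropy (natural log) of a label list."""
    n = len(labels)
    return -sum(c / n * math.log(c / n) for c in Counter(labels).values())


def nmi(a, b):
    """Normalized mutual information of two partitions given as label lists."""
    n = len(a)
    ha, hb = entropy(a), entropy(b)
    if ha == 0 or hb == 0:
        # a single-community partition carries no information
        return 1.0 if ha == hb else 0.0
    count_a, count_b = Counter(a), Counter(b)
    joint = Counter(zip(a, b))
    mi = sum(
        c / n * math.log(c * n / (count_a[x] * count_b[y]))
        for (x, y), c in joint.items()
    )
    return mi / math.sqrt(ha * hb)


def algorithm_similarity_network(partitions, threshold=0.8):
    """Link algorithms whose partitions of the same nodes have NMI >= threshold."""
    edges = {}
    for (name_a, part_a), (name_b, part_b) in combinations(sorted(partitions.items()), 2):
        score = nmi(part_a, part_b)
        if score >= threshold:
            edges[(name_a, name_b)] = score
    return edges
```

Because NMI ignores label names, two algorithms that return the same grouping under different community IDs are linked with a score of 1. Running community detection on the resulting edge set would then group the algorithms themselves.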

Abstract

The 2016 United States presidential election has been characterized as a period of extreme divisiveness that was exacerbated on social media by the influence of fake news, trolls, and social bots. However, the extent to which the public became more polarized in response to these influences over the course of the election is not well understood. In this paper we propose IdeoTrace, a framework for (i) jointly estimating the ideology of social media users and news websites and (ii) tracing changes in user ideology over time. We apply this framework to the last two months of the election period for a group of 47,508 Twitter users and demonstrate that both liberal and conservative users became more polarized over time.

Abstract

Research in social network analytics has already extensively explored how engagement on online social networks can lead to observable effects on users’ real-world behavior (e.g., changing exercise patterns or dietary habits) and on their psychological states. The objective of our work in this paper is to investigate the flip side: whether engaging in or disengaging from real-world activities is reflected in users’ affective processes, such as anger, anxiety, and sadness, as expressed in their posts on online social media. We collected data from Foursquare and Twitter and found that engaging in or disengaging from a real-world activity, such as frequenting bars or no longer going to a gym, has a direct impact on users’ affective processes. In particular, we report that engaging in a routine real-world activity leads to expressing less emotional content online, whereas the reverse is observed when users abandon a regular real-world activity.

Abstract

With the increasing popularity of portable devices with cameras (e.g., smartphones and tablets) and ubiquitous Internet connectivity, travelers can share their experiences instantly while traveling by posting the photos they take to social media platforms. In this paper, we present a new image-driven poetry recommender system that takes a traveler's photo as input and recommends classical poems that can enrich the photo with aesthetically pleasing quotes from the poems. Solving this new problem involves three critical challenges: i) How to extract the implicit artistic conception embedded in both poems and images? ii) How to identify the salient objects in the image without knowing the creator's intent? iii) How to accommodate diverse user perceptions of the image and make diversified poetry recommendations? The proposed iPoemRec system jointly addresses these challenges by developing heterogeneous information network and neural embedding techniques. Evaluation results from real-world datasets and a user study demonstrate that our system can recommend highly relevant classical poems for a given photo and receives significantly higher user ratings than the state-of-the-art baselines.

Abstract

Road traffic accidents are a major challenge in urban transportation systems. An effective countermeasure is to accurately forecast the traffic risks in a city before accidents actually happen. Current traffic accident prediction solutions largely rely on accurate data collected from infrastructure-based sensors, which is not always available due to resource constraints or privacy and legal concerns. In this paper, we address this limitation by exploring social sensing, a new sensing paradigm that uses humans as sensors to report the states of the physical world. In particular, we consider two types of publicly available social sensing data sources: social media data (e.g., traffic posts on Twitter) and open city data (e.g., traffic data from the city web portal). We develop RiskCast, an inductive multi-view learning approach that accurately forecasts traffic risk by exploiting the social sensing data under a principled co-regularization framework. Evaluation results on a real-world dataset from New York City show that RiskCast significantly outperforms the state-of-the-art baselines in forecasting the traffic risks in a city.
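
Co-regularization in its generic two-view form can be sketched as an objective: each view is fitted to the observed labels, and a penalty pulls the two views' predictions together on unlabeled points. The linear per-view predictors, squared loss, view names (`w_social`, `w_city`) and weight `lam` are all assumptions for illustration; RiskCast's inductive formulation is not reproduced here.

```python
def predict(weights, x):
    """Linear prediction for one view."""
    return sum(w * xi for w, xi in zip(weights, x))


def co_regularized_loss(w_social, w_city, labeled, unlabeled, lam=1.0):
    """Squared-error fit of each view plus a disagreement penalty.

    labeled:   list of (social_features, city_features, observed_risk)
    unlabeled: list of (social_features, city_features)
    """
    fit = sum(
        (predict(w_social, xs) - y) ** 2 + (predict(w_city, xc) - y) ** 2
        for xs, xc, y in labeled
    )
    # co-regularization: penalize the two views for disagreeing on unlabeled points
    disagreement = sum(
        (predict(w_social, xs) - predict(w_city, xc)) ** 2 for xs, xc in unlabeled
    )
    return fit + lam * disagreement
```

Minimizing this objective over both weight vectors (for example with gradient descent) lets the plentiful unlabeled region-time pairs constrain each view through the other, which is the usual motivation for co-regularized multi-view learning.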

Abstract

We propose a novel formalization of roles in social networks that unifies the most commonly used definitions of role equivalence. As one consequence, we obtain a single, straightforward proof that role equivalences form lattices. Our formalization focuses on the evolution of roles from arbitrary initial conditions and thereby generalizes notions of relative and iterated roles that have been suggested previously. In addition to the unified structure result, this provides a micro-foundation for the emergence of roles. Considering the genesis of roles may explain, and help overcome, the problem that social networks rarely exhibit interesting role equivalences of the traditional kind. Finally, we hint at ways to further generalize the role concept to multivariate networks.
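
Iterated roles evolving from an initial condition can be illustrated with colour refinement, used here as a familiar stand-in rather than the paper's own formalization. Starting from any initial role assignment, each node's next role is determined by its current role together with the multiset of its neighbours' roles, and refinement stops once no role class splits further. For an undirected graph this yields the coarsest equitable partition that refines the initial assignment. The function name and input format are hypothetical.

```python
from collections import Counter


def refine_roles(adjacency, initial):
    """Colour refinement from an arbitrary initial role assignment.

    adjacency: dict node -> list of neighbours (undirected graph)
    initial:   dict node -> initial role (mutually comparable labels)
    """
    roles = dict(initial)
    while True:
        # signature = current role + multiset of neighbour roles
        signatures = {
            node: (roles[node], tuple(sorted(Counter(roles[nb] for nb in nbrs).items())))
            for node, nbrs in adjacency.items()
        }
        # relabel distinct signatures with consecutive integers
        relabel = {sig: i for i, sig in enumerate(sorted(set(signatures.values())))}
        new_roles = {node: relabel[sig] for node, sig in signatures.items()}
        # keeping the current role in the signature means classes can only split
        if len(set(new_roles.values())) == len(set(roles.values())):
            return new_roles
        roles = new_roles
```

On the path a-b-c-d-e with uniform initial roles, the endpoints a and e end up sharing a role, as do b and d, while c gets its own. Giving a a different initial role breaks the symmetry and every node's role becomes distinct, which shows how the outcome depends on the initial conditions.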

Abstract

How to effectively detect fake news and prevent its diffusion on social media has gained much attention in recent years. However, relatively little attention has been given to exploiting user comments on posts, and the latent sentiments therein, for detecting fake news. Inspired by the rich information available in user comments on social media, we investigate whether the latent sentiments hidden in user comments can help distinguish fake news from reliable content. We incorporate users' latent sentiments into an end-to-end deep embedding framework for detecting fake news, named SAME. First, we use multi-modal networks to deal with heterogeneous data modalities. Second, to learn semantically meaningful spaces per data source, we adopt an adversarial mechanism. Third, we define a novel regularization loss to bring embeddings of relevant pairs closer. Our comprehensive validation on two real-world datasets, PolitiFact and GossipCop, demonstrates the effectiveness of SAME in detecting fake news, significantly outperforming state-of-the-art methods.
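
The abstract only states that SAME's regularization loss brings embeddings of relevant pairs closer. A standard margin-based contrastive loss, used here as an assumption rather than the paper's definition, shows how such a term behaves: relevant pairs are penalized by their squared distance, while irrelevant pairs are penalized only when they lie closer than a hypothetical `margin`.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pair_loss(anchor, other, relevant, margin=1.0):
    """Margin-based contrastive loss for one embedding pair."""
    d = euclidean(anchor, other)
    if relevant:
        return d ** 2  # pull relevant pairs together
    return max(0.0, margin - d) ** 2  # push irrelevant pairs at least `margin` apart
```

Averaged over all pairs and added to the main classification objective, a term like this shapes the shared embedding space so that, for example, a post and its own comments sit close together.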

Abstract

The penetration of social media has had deep and far-reaching consequences for information production and consumption. Widespread use of social media platforms has led malicious users and attention seekers to spread rumors and fake news. This trend is particularly evident on microblogging platforms, where news can go viral in a matter of hours and lead to mass panic and confusion. One intriguing fact about rumors and fake news is that rumor stories very often prompt users to adopt different stances toward the rumor posts. Understanding user stances in rumor posts is thus very important for identifying the veracity of the underlying content. While rumor veracity and stance detection have been viewed as disjoint tasks, we demonstrate here how jointly learning both of them can be fruitful. In this paper, we propose RumorSleuth, a multi-task deep learning model that leverages both textual information and user profile information to jointly identify the veracity of a rumor along with users' stances. Tests on two publicly available rumor datasets demonstrate that RumorSleuth outperforms current state-of-the-art models, achieving up to a 14% performance gain in rumor veracity classification and around a 6% improvement in user stance classification.

Abstract
